MNIST CNN Layer Analysis

Overview

This project investigates how adding convolutional layers to a neural network affects both accuracy and model complexity in handwritten digit classification on the MNIST dataset. Conducted as part of a Digital Image Processing course, the work compares three architectures, ranging from a simple fully connected model to a CNN with two convolutional blocks, and examines the trade-off between test accuracy and parameter count.

Motivation

In deep learning, increasing model depth can improve performance, but often at the cost of greater computational complexity. This project was designed to provide an intuitive, visual analysis of how convolutional layers influence model expressiveness, accuracy, and efficiency on a classic classification problem.

Technical Approach

- Dataset: MNIST (60,000 training and 10,000 test images, each 28×28 grayscale)

- Preprocessing: Normalization, one-hot encoding, reshaping to include channel dimension

- Architectures Compared:
  - Baseline_1FC: Fully connected (Dense) layer only
  - Model_1Conv: One Conv2D + MaxPooling2D block
  - Model_2Conv: Two Conv2D + MaxPooling2D blocks

- Training Setup:
  - Optimizer: Adam
  - Loss: Categorical cross-entropy
  - Epochs: 5
  - Batch size: 32

- Evaluation:

- Accuracy on test set

- Total trainable parameters

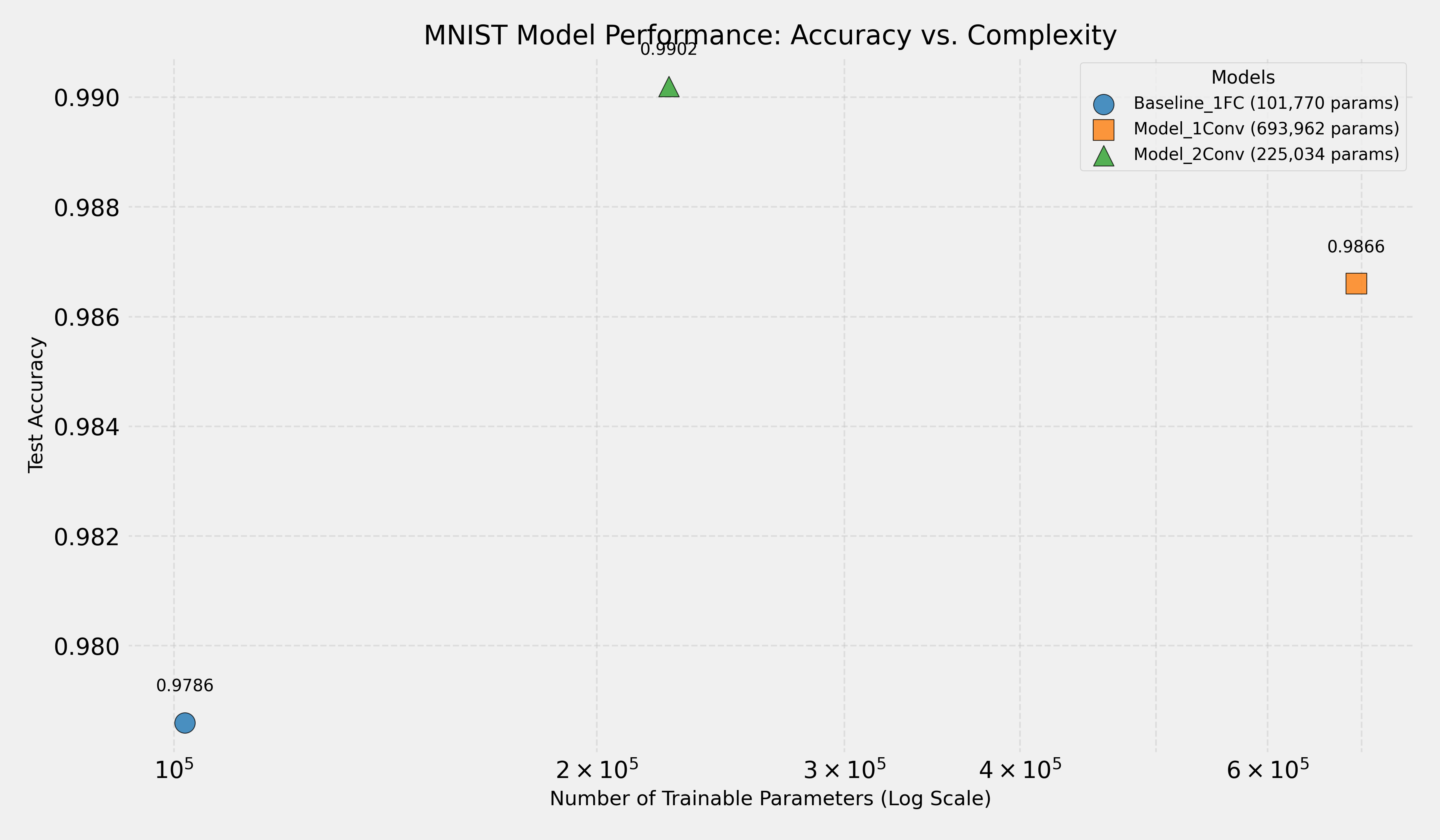

- Scatter plot of Accuracy vs. Parameter Count (log scale)
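The preprocessing steps listed above might look like the following NumPy sketch. The function name `preprocess` is hypothetical, and the divide-by-255 scaling is an assumption: the write-up says only "normalization", which could also mean mean/standard-deviation standardization. Synthetic arrays stand in for the real MNIST data so the snippet runs offline.

```python
import numpy as np


def preprocess(images: np.ndarray, labels: np.ndarray, num_classes: int = 10):
    """Hypothetical helper preparing raw MNIST arrays for a Conv2D model.

    Assumes `images` has shape (N, 28, 28) with uint8 pixels in [0, 255]
    and `labels` has shape (N,) with integer class ids 0..num_classes-1.
    """
    # Normalization (assumed scheme): scale pixels from [0, 255] to [0.0, 1.0].
    x = images.astype(np.float32) / 255.0

    # Add a trailing channel dimension: (N, 28, 28) -> (N, 28, 28, 1),
    # the channels-last layout Keras Conv2D layers use by default.
    x = x.reshape(-1, 28, 28, 1)

    # One-hot encode labels for categorical cross-entropy:
    # row i of the identity matrix is the one-hot vector for class i.
    y = np.eye(num_classes, dtype=np.float32)[labels]
    return x, y


# Usage with a tiny synthetic batch in place of real MNIST images.
rng = np.random.default_rng(0)
fake_images = rng.integers(0, 256, size=(4, 28, 28), dtype=np.uint8)
fake_labels = np.array([3, 0, 9, 3])
x, y = preprocess(fake_images, fake_labels)
print(x.shape, y.shape)  # (4, 28, 28, 1) (4, 10)
```

The same arrays would feed all three models unchanged; the baseline's flattening step simply discards the channel dimension again.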

Key Features / Contributions

- Designed a minimal yet structured experiment to isolate the impact of architectural depth

- Implemented and evaluated three progressively complex models under identical training conditions

- Produced clear visualizations and tabulated metrics for comparing the architectures' accuracy and size

- Interpreted results in terms of performance trade-offs and parameter efficiency

Results & Findings

| Model | Test Accuracy | Trainable Parameters |
|---|---|---|
| Baseline_1FC | 0.9780 | 101,770 |
| Model_1Conv | 0.9853 | 693,962 |
| Model_2Conv | 0.9893 | 225,034 |

- Adding the first Conv2D block raised test accuracy from 0.9780 to 0.9853 (test error fell from 2.20% to 1.47%), but increased the trainable parameter count about 6.8× (from 101,770 to 693,962).

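- Adding a second Conv2D block raised accuracy further to 0.9893 (1.07% error) while cutting the parameter count by about 68% relative to Model_1Conv (to 225,034). The second pooling stage shrinks the feature map again before it is flattened, so the first Dense layer receives far fewer inputs; because that layer typically holds most of a small CNN's weights, the total drops even though a convolutional layer was added.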
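The parameter counts in the table can be reproduced by hand. The sketch below assumes layer details the write-up does not state: 3×3 kernels with "valid" padding, 32 and 64 filters in the two Conv2D layers, 2×2 max pooling, a 128-unit hidden Dense layer, and a 10-unit output layer. These inferred choices reproduce all three reported counts exactly, which suggests, but does not confirm, the actual architectures. Under them, Baseline_1FC also has a hidden layer; a single Dense(10) on 784 inputs would have only 7,850 parameters. The helper names `conv2d_params`, `dense_params`, and `count_params` are hypothetical.

```python
from typing import Sequence, Tuple


def conv2d_params(kernel: int, in_channels: int, filters: int) -> int:
    # Each filter has kernel*kernel*in_channels weights plus one bias.
    return (kernel * kernel * in_channels + 1) * filters


def dense_params(in_features: int, units: int) -> int:
    # One weight per input-unit pair plus one bias per unit.
    return (in_features + 1) * units


def count_params(
    conv_filters: Sequence[int],
    image_side: int = 28,
    in_channels: int = 1,
    kernel: int = 3,          # assumed 3x3 kernels, 'valid' padding
    pool: int = 2,            # assumed 2x2 max pooling, stride 2
    hidden_units: int = 128,  # assumed hidden Dense layer size
    num_classes: int = 10,
) -> Tuple[int, int]:
    """Return (trainable parameters, flattened feature size) for a
    hypothetical stack of Conv2D+MaxPooling2D blocks followed by
    Dense(hidden_units) and Dense(num_classes)."""
    total = 0
    side, channels = image_side, in_channels
    for filters in conv_filters:
        total += conv2d_params(kernel, channels, filters)
        # 'valid' convolution shrinks the side length by kernel - 1;
        # pooling then divides it by the pool size, rounding down.
        side = (side - kernel + 1) // pool
        channels = filters  # pooling adds no trainable parameters
    flat = side * side * channels
    total += dense_params(flat, hidden_units)
    total += dense_params(hidden_units, num_classes)
    return total, flat


for name, filters in [
    ("Baseline_1FC", []),
    ("Model_1Conv", [32]),
    ("Model_2Conv", [32, 64]),
]:
    total, flat = count_params(filters)
    print(f"{name}: flattened size {flat}, trainable params {total:,}")
# Baseline_1FC: flattened size 784, trainable params 101,770
# Model_1Conv: flattened size 5408, trainable params 693,962
# Model_2Conv: flattened size 1600, trainable params 225,034
```

Under these assumptions, the Dense layer after flattening holds 692,352 of Model_1Conv's 693,962 parameters but only 204,928 of Model_2Conv's 225,034: the second pooling stage cuts the flattened size from 13×13×32 = 5,408 to 5×5×64 = 1,600, which outweighs the 18,496 weights the second Conv2D layer adds.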

Reflection

This project showed how a deeper CNN can improve performance while also reducing over-parameterization, provided the architecture is designed carefully. It strengthened my understanding of convolutional operations, parameter budgeting, and trade-off analysis in model development, all key skills for building efficient deep learning systems.